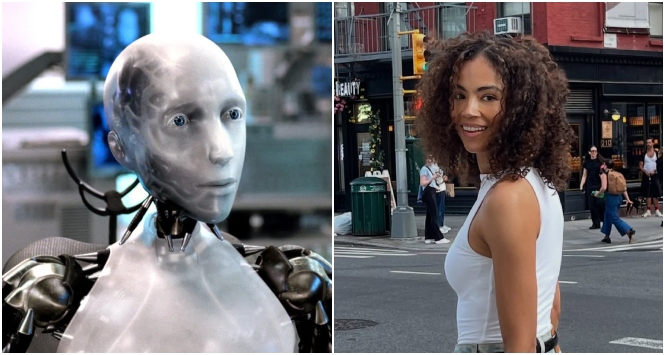

Futurist Sinéad Bovell has raised the alarm about what she believes is OpenAI’s true intent behind its much-hyped Sora app, and it’s not what most people think. Her statement is igniting conversation across the tech community.

While the app has been marketed as a platform for AI-generated videos — a creative tool for users to prompt, generate, and share short clips — Bovell contends that Sora isn’t about social media at all. In a viral Instagram post and accompanying video, she called it “a data pipeline for teaching robots how to see.”

“Sora isn’t about building a TikTok clone no one asked for,” Bovell wrote. “It’s about training our behavior — and training robotic agents.”

Her remarks tap into a growing unease in the AI ethics space: that public-facing “fun” tools often serve deeper technological ambitions — collecting vast datasets to power machine learning models that extend far beyond user entertainment.

A Futurist’s Warning

Bovell, a well-known AI ethics advocate and founder of WAYE (Weekly Advice for Young Entrepreneurs), has long warned about the hidden costs of innovation. In her recent commentary, she draws parallels between OpenAI’s rollout of Sora and Apple’s earlier behavioral conditioning through Photo Booth — a tool that, at the time, seemed purely playful.

“Apple taught us to get comfortable seeing ourselves on camera,” Bovell explained in her video. “That was the stepping stone to FaceTime, Instagram, and a world built around video-first communication. Sora is doing the same — just for AI.”

The implication? By normalizing AI-generated visual creation, OpenAI is training humans to interact with, and trust, synthetic video as part of daily life. In the process, Bovell argues, it is also gathering crucial visual data to help robotic systems learn about the physical world.

The “Robot Connection”

Digging into the fine print, Bovell points to language on the Sora website, where terms like “world simulators” and “robotic agents” appear repeatedly. The term “social platform,” notably, does not.

“World simulators,” she explains, are virtual environments that allow AI-driven robots to experiment, make mistakes, and learn — without physical consequences.

But for those simulations to be effective, they must mirror real-world physics with precision: how light moves, how water flows, how shadows form.

That’s where, in Bovell’s telling, the data comes in. Every AI-generated video, every prompt, and every edit could help improve these simulations, effectively teaching AI systems to interpret depth, movement, and causality.

“It’s not just about what humans are creating,” Bovell said. “It’s about what AI learns from the way we create.”

A New Ethical Crossroads

Bovell’s critique comes amid an intensifying debate about AI’s ethical boundaries. If she’s right, Sora represents a subtle but seismic shift: from AI observing humans to humans unconsciously training AI.

This raises profound questions about informed consent and data use transparency. Are users aware that their creative outputs could be fueling autonomous systems capable of perceiving and acting in the physical world? And who controls that knowledge — or the machines that result from it?

Ethicists have warned that the line between creative engagement and behavioral conditioning is blurring fast. If Sora’s true purpose is indeed to model human interactions and visual environments for robotics, it may signal a future where “fun” is just another word for “function.”

The Bigger Picture

OpenAI, for its part, has positioned Sora as a step toward “AI systems that understand and interact with the world,” though it has stopped short of calling it a robotics training tool. Yet Bovell’s analysis suggests that such phrasing isn’t accidental; it is strategic framing.

In her view, the public fixation on Sora as a social app may be missing the forest for the trees. What’s really unfolding, she argues, is a rehearsal for the next generation of AI — one that doesn’t just generate images, but navigates the world we live in.

“Everyone’s talking about social media,” Bovell concluded. “But this is about something much bigger. It’s about how we teach machines to see — and, eventually, to move.”